☀️ Happy Wednesday!

Perplexity has been gaining a lot of traction this year, with more and more people talking about it. Despite this, ChatGPT remains the bigger name.

So, which one should you choose—Perplexity or ChatGPT?

How good is Perplexity, really?

And more importantly, how useful is it for designers?

In this article, I’ll walk through a detailed side-by-side comparison of the two platforms, each running one of its advanced models, using practical product design use cases.

By the end, I hope you’ll have a clearer understanding of their differences and be better prepared to choose the option that works best for you.

⚖️ A Tricky Comparison

Before we start, I have to say that Perplexity vs. ChatGPT is not exactly an "apples to apples" comparison.

First, their primary purposes are not the same. Perplexity is mostly an AI search engine, whereas ChatGPT is mostly a conversational AI chatbot, although the two overlap a lot.

Second, the AI models they rely on are not the same. Perplexity Pro offers access to a wide variety of models, including GPT-4o, Claude 3 Opus, and Sonar 32k, each fine-tuned to work with Perplexity’s search capabilities. In contrast, ChatGPT relies solely on OpenAI’s models.

I could have compared Perplexity (using GPT-4o) with ChatGPT (also using GPT-4o), but I wanted to explore more.

From a product designer’s perspective, if I want to use a platform with its best capabilities to perform my tasks, which one should I choose?

So, I chose to test Perplexity (using Claude 3 Opus) vs. ChatGPT (using GPT-4o) instead, because:

I’m curious to see how powerful Claude 3 Opus is, as it’s considered one of the most advanced AI models on Perplexity. (Claude 3.5 Sonnet is theoretically more advanced, but feedback suggests it’s not yet as effective as Opus in Perplexity’s environment.)

I am a heavy user of ChatGPT, specifically its GPT-4o model. (Although o1-preview is theoretically more advanced, I didn’t choose it because it is noticeably slower than 4o and currently has a limit of 50 messages per week.)

Note that I am using the paid tiers, ChatGPT Plus and Perplexity Pro, for this comparison.

📋 Comparison Overview

I tried to create a scenario that resembles a real-world situation:

Let’s say I am a product designer who needs to work on a project called Quick Survey Generator. I asked Perplexity and ChatGPT the same set of questions, covering a variety of tasks to test their performance:

Product Discovery—Asking them to navigate ambiguity and recommend what steps I should take next.

Competitive Analysis—Asking them to research similar products on the market.

Interview Questions—Asking them to generate effective questions to better understand the users.

Design Feedback—Asking them to provide actionable feedback on a user interface.

Image Analysis—Asking them to describe a user interface in detail.

Then, I’ll present the results in the following format:

My Prompt

Perplexity’s Response

ChatGPT’s Response

Winner Announcement

Curious about the results? Let’s dive in!

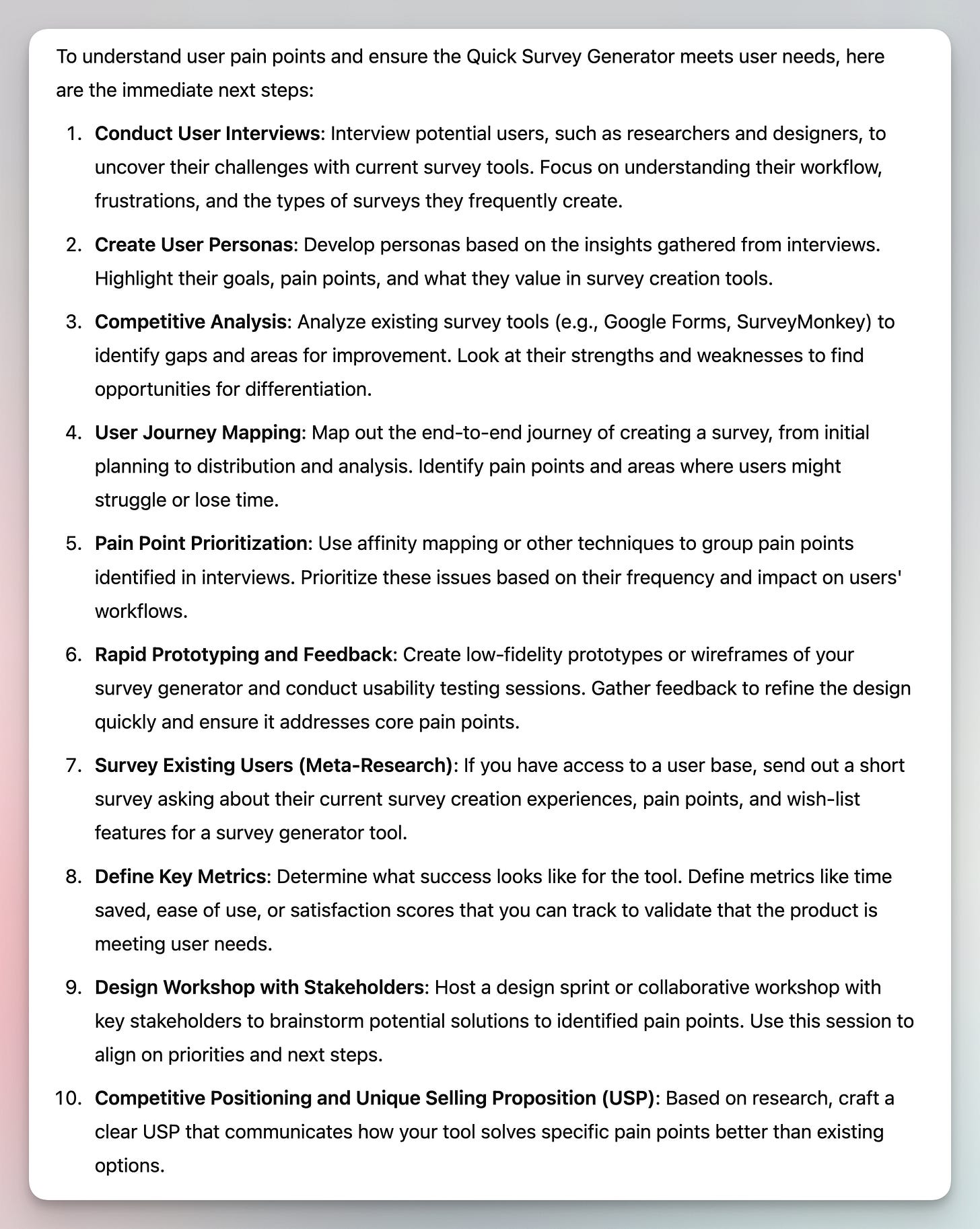

1️⃣ Product Discovery

My Prompt

“You are a seasoned product designer working on a tool called Quick Survey Generator, which helps user researchers and designers create surveys quickly with the best questions. What are the helpful immediate next steps to understand user pain points and ensure the product meets their needs?”

Perplexity’s Response

ChatGPT’s Response

Takeaway

Winner: Perplexity (using Claude 3 Opus)

Reason: Perplexity’s response is slightly more actionable and focused. In contrast, ChatGPT’s response feels broader and more generic. In terms of speed, both were almost the same.

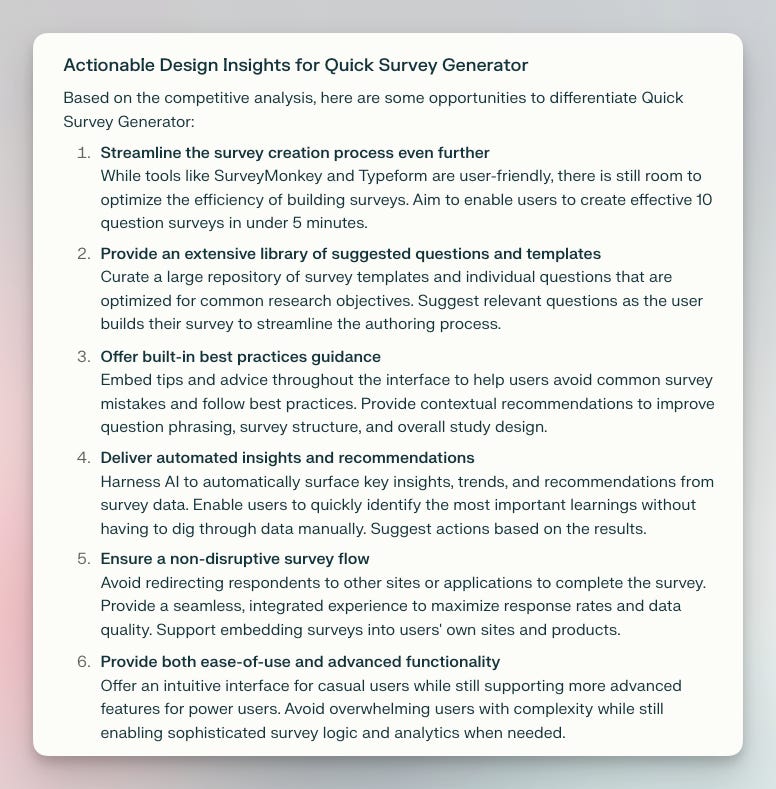

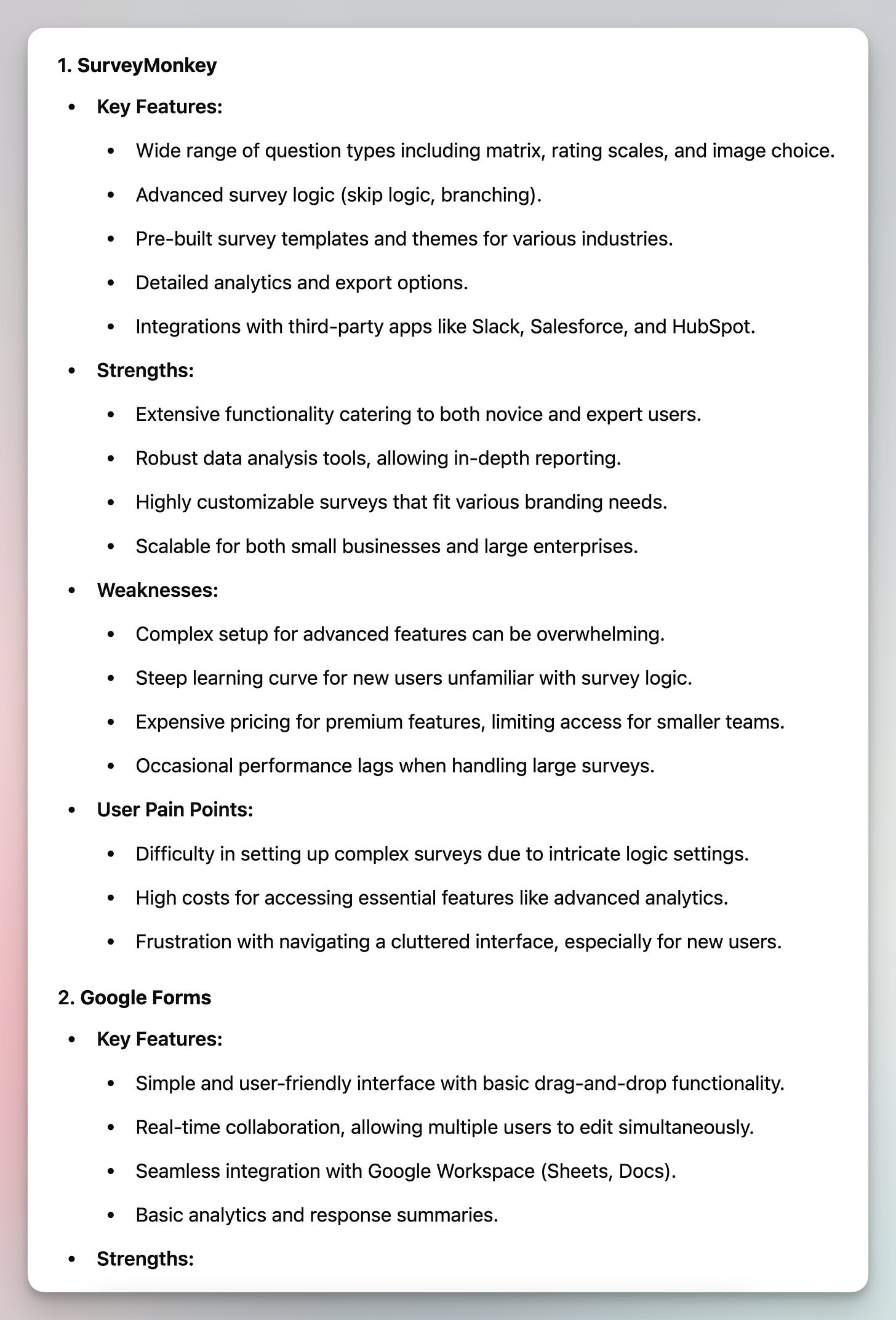

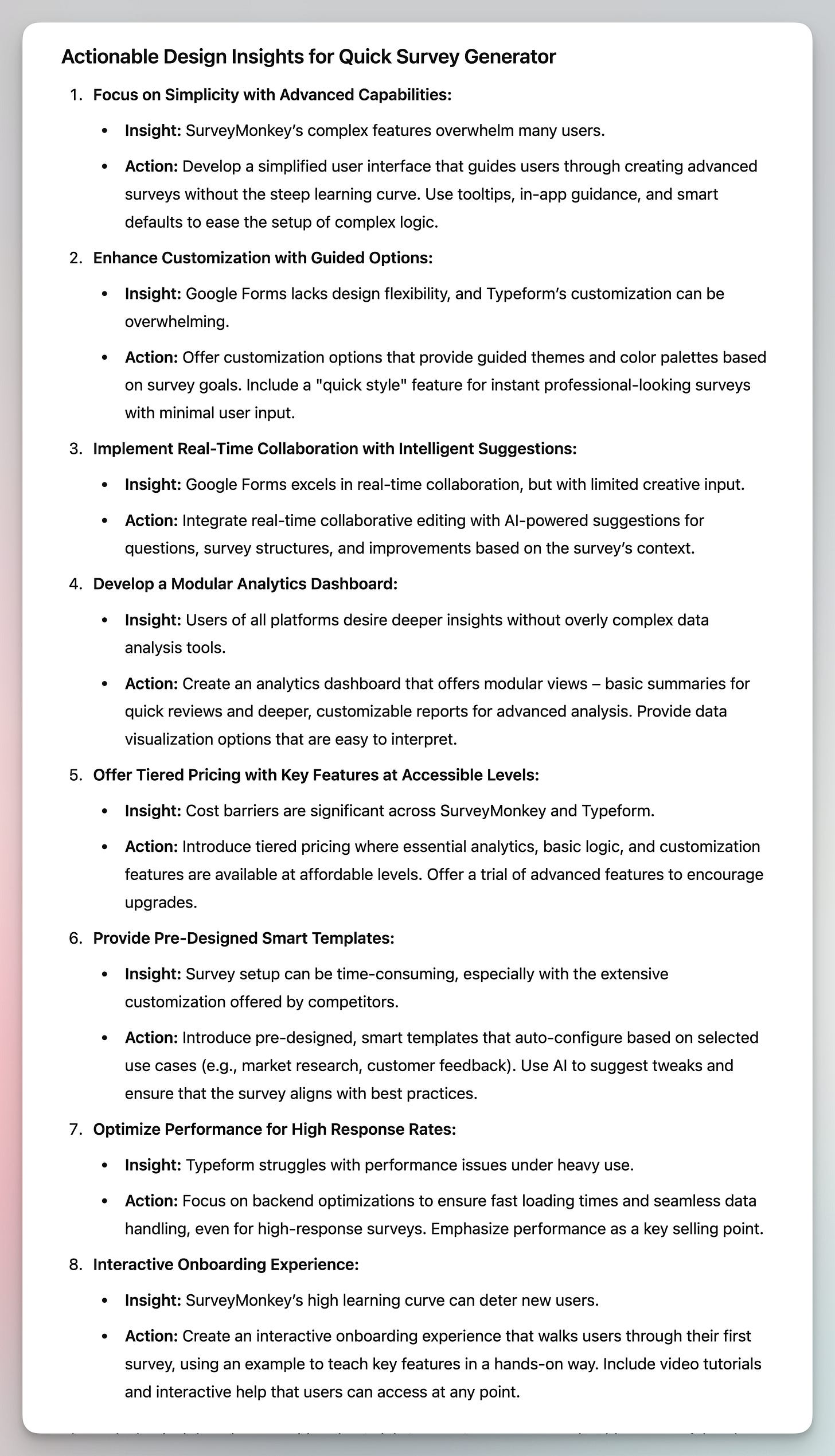

2️⃣ Competitive Analysis

My Prompt

“Conduct a competitive analysis of popular survey tools in the market. Identify key features, strengths, weaknesses, and user pain points. Provide actionable design insights to differentiate our Quick Survey Generator, focusing on areas where we can improve user experience, streamline the survey creation process, and address unmet needs.”

Perplexity’s Response

ChatGPT’s Response

Takeaway

Winner: Perplexity (using Claude 3 Opus)

Reason: The responses were actually quite close, but I still chose Perplexity for its advantages in search-related tasks:

Perplexity uses real-time web search, while the current ChatGPT model (GPT-4o) has a knowledge cutoff of October 2023. Unless it browses the web, ChatGPT doesn’t have information about events or updates after that date, which is a problem when I need the latest information.

Perplexity also offers extended search options related to my queries, as shown below, such as the steps taken by the model, related topics, and relevant visuals.

I found the sources Perplexity provided next to each response very helpful, whereas I struggled to get ChatGPT to provide sources for its responses.

3️⃣ Interview Questions

My Prompt

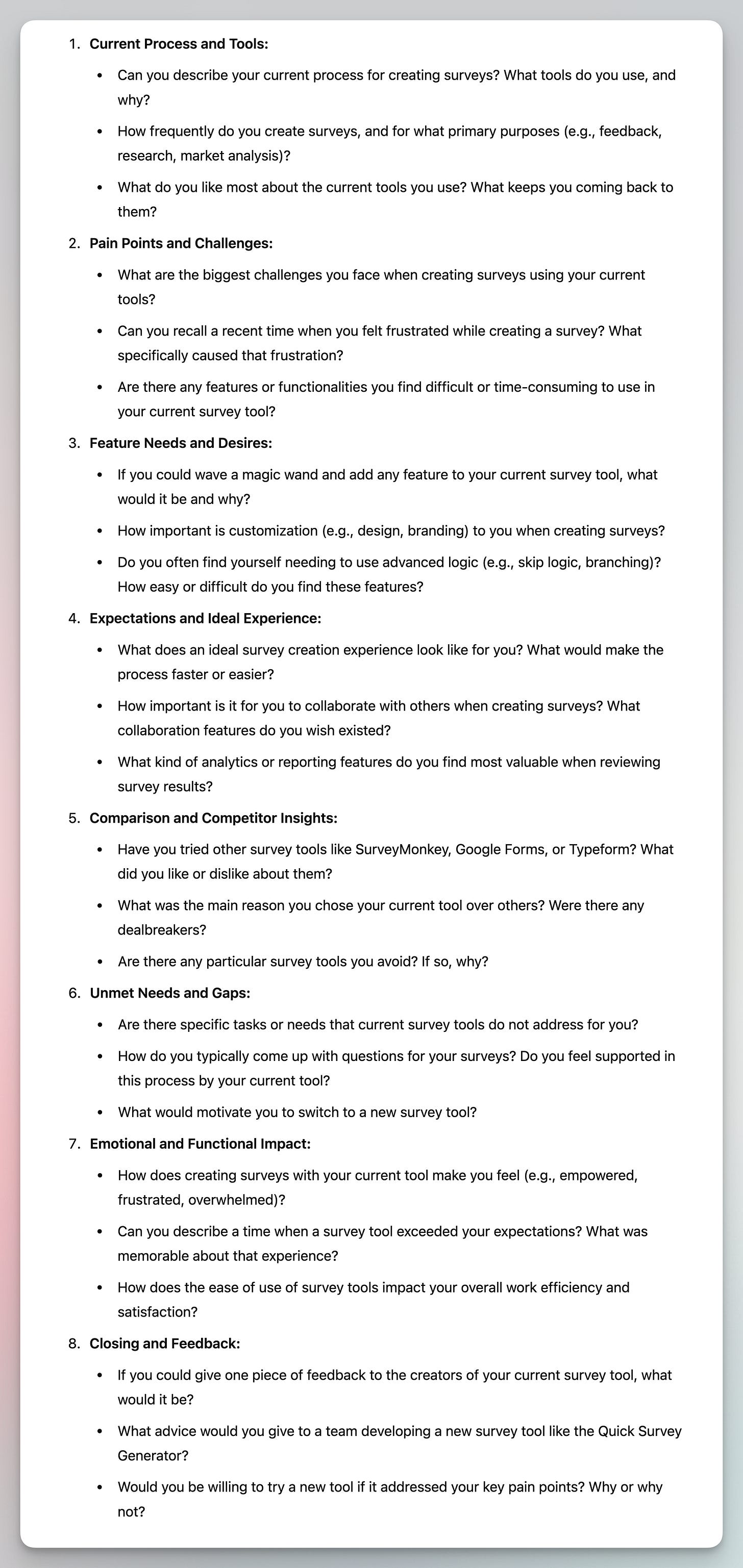

“Generate a set of interview questions to ask potential users of our Quick Survey Generator tool, which is designed to help researchers and designers create surveys quickly with the best questions. The questions should aim to uncover user pain points, needs, and expectations regarding survey creation tools.”

Perplexity’s Response

ChatGPT’s Response

Takeaway

Winner: Perplexity (using Claude 3 Opus)

Reason: It provides a better flow, with questions that feel more contextual. It also asks how users react to the Quick Survey Generator concept, which makes it more effective at surfacing actionable insights. Quantitative framing such as “on a scale of 1-10” and “the top 3 things” also adds value.

4️⃣ Design Feedback

My Prompt

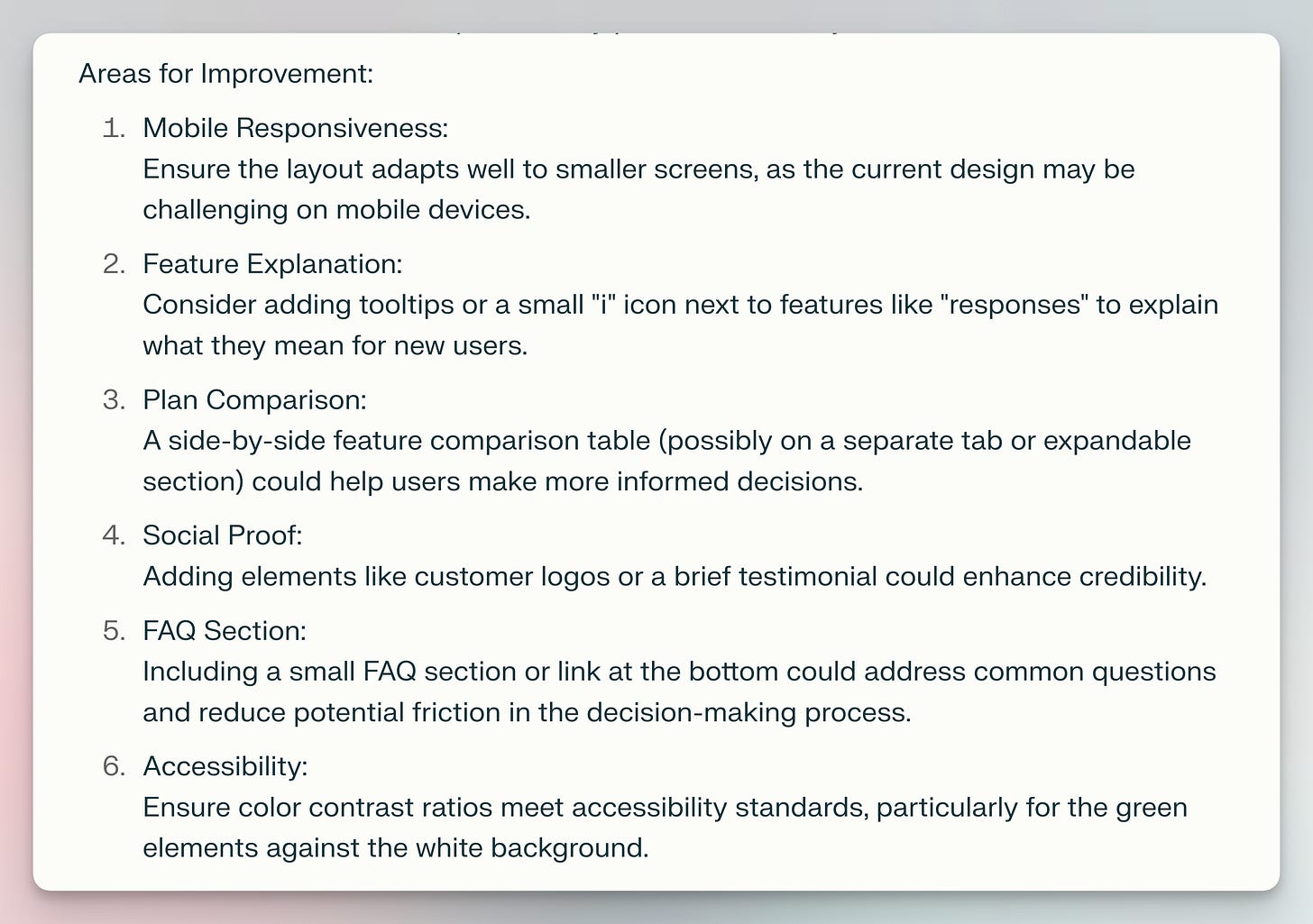

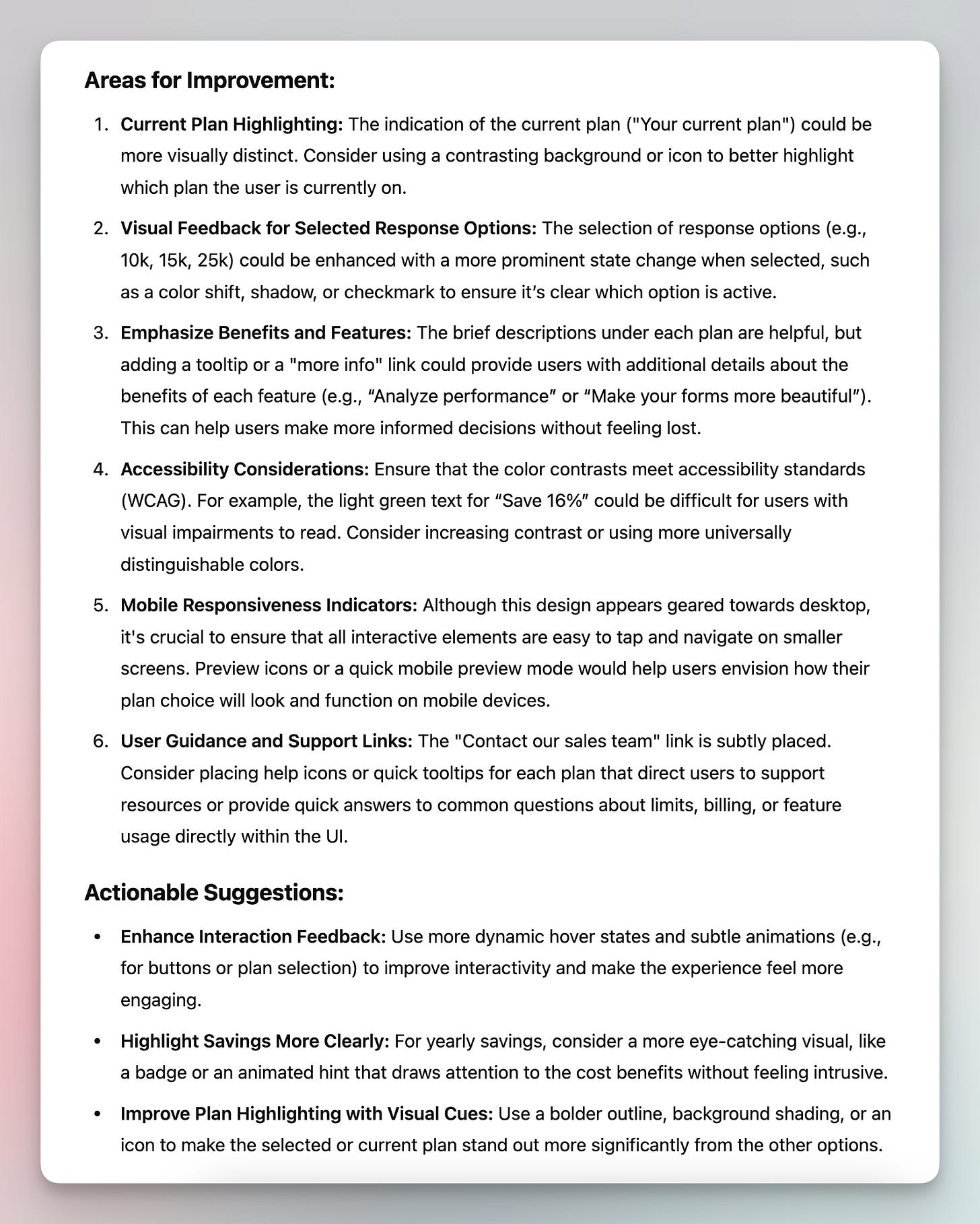

“Act as a seasoned product designer and provide detailed feedback on the user interface design provided.”

Perplexity’s Response

ChatGPT’s Response

Takeaway

Winner: ChatGPT (using GPT-4o)

Reason: Perplexity responds more like a robot, with concise but dry answers, whereas ChatGPT behaves more like an assistant, offering suggestions with greater detail and creativity.

5️⃣ Image Analysis

My Prompt

“Analyze the information shown in the image provided and describe it in detail.”

(I had to skip the screenshot due to article length limits. It was a screenshot of Typeform’s pricing page.)

Perplexity and ChatGPT’s Responses

(I had to skip these screenshots due to article length limits.)

Takeaway

Winner: Tie

Reason: Perplexity and ChatGPT were both able to provide similar information with good accuracy and a comparable level of depth.

💡 Summary

The quality of the responses is generally close. Perplexity gives off a concise, factual vibe, although it can feel a little robotic. It’s a great fit for academic research, when you want to gather up-to-date information and synthesize it. ChatGPT, on the other hand, feels more like a versatile assistant with more creativity.

Perplexity’s interface offers many features. For example, you can switch to “Writing Mode” if you want Perplexity to write more creatively without referencing real-time information online. Sometimes, however, it feels like more than I need compared to ChatGPT’s simple interface.

ChatGPT is more conversational and easier to interact with, and its responses feel creative and diverse. It also has handy features that Perplexity doesn’t, such as custom GPTs and voice mode (in the app). ChatGPT’s longer context window adds value too: when a conversation gets lengthy, ChatGPT appears to remember its earlier parts better.

So which one would I use as a designer?

I would still primarily use ChatGPT due to my familiarity with it and its well-rounded capabilities. After all, I use ChatGPT for a variety of tasks, and it makes sense to stick to one primary platform rather than frequently switching. That said, I’ll definitely start using Perplexity more often, particularly in the following scenarios:

Search for and read up-to-date information online.

Conduct research-related tasks and synthesize insights from different sources.

When I’m not satisfied with ChatGPT’s response, even after switching to o1-preview.

Test new Claude models. (Ultimately, I need to try Claude on its native platform, Claude.ai, someday.)

That’s it for this week. Thanks for reading.

Let me know if you have any questions.

—Xinran

P.S. Today’s newsletter broke two records:

It’s the longest piece I’ve ever written, and I struggled to trim it down.

It’s also the one I’ve spent the most time writing. I expected to finish it in 3 hours, but it took me the whole Saturday…