Four months ago, I did a side-by-side comparison of Perplexity and ChatGPT.

Today, I’ll share a similar experiment with Claude and ChatGPT, testing them with practical use cases in product design.

The test will feature Anthropic’s latest Claude 3.5 Sonnet against OpenAI’s GPT-4o model.

By the end of this comparison, I hope you’ll have a better understanding of their differences and feel more confident in choosing the option that best suits your needs.

📋 Comparison Overview

I used the same scenario as in my previous article, focusing on a real-world situation:

Imagine I’m a product designer working on a project called Quick Survey Generator. To evaluate their performance, I posed the same set of questions to both Claude and ChatGPT, covering various tasks:

Product Discovery—Navigate ambiguity and recommend what steps I should take next.

Competitive Analysis—Research similar products on the market.

Interview Questions—Generate effective questions to better understand the users.

Design Feedback—Provide actionable feedback on a user interface.

Image Analysis—Describe a user interface in detail.

Wireframe Generation—Create wireframes based on design requirements.

Next, I’ll present the results in the following format:

My Prompt

Claude’s Response

ChatGPT’s Response

Winner Announcement

Curious about the results? Let’s jump in!

1️⃣ Product Discovery

My Prompt

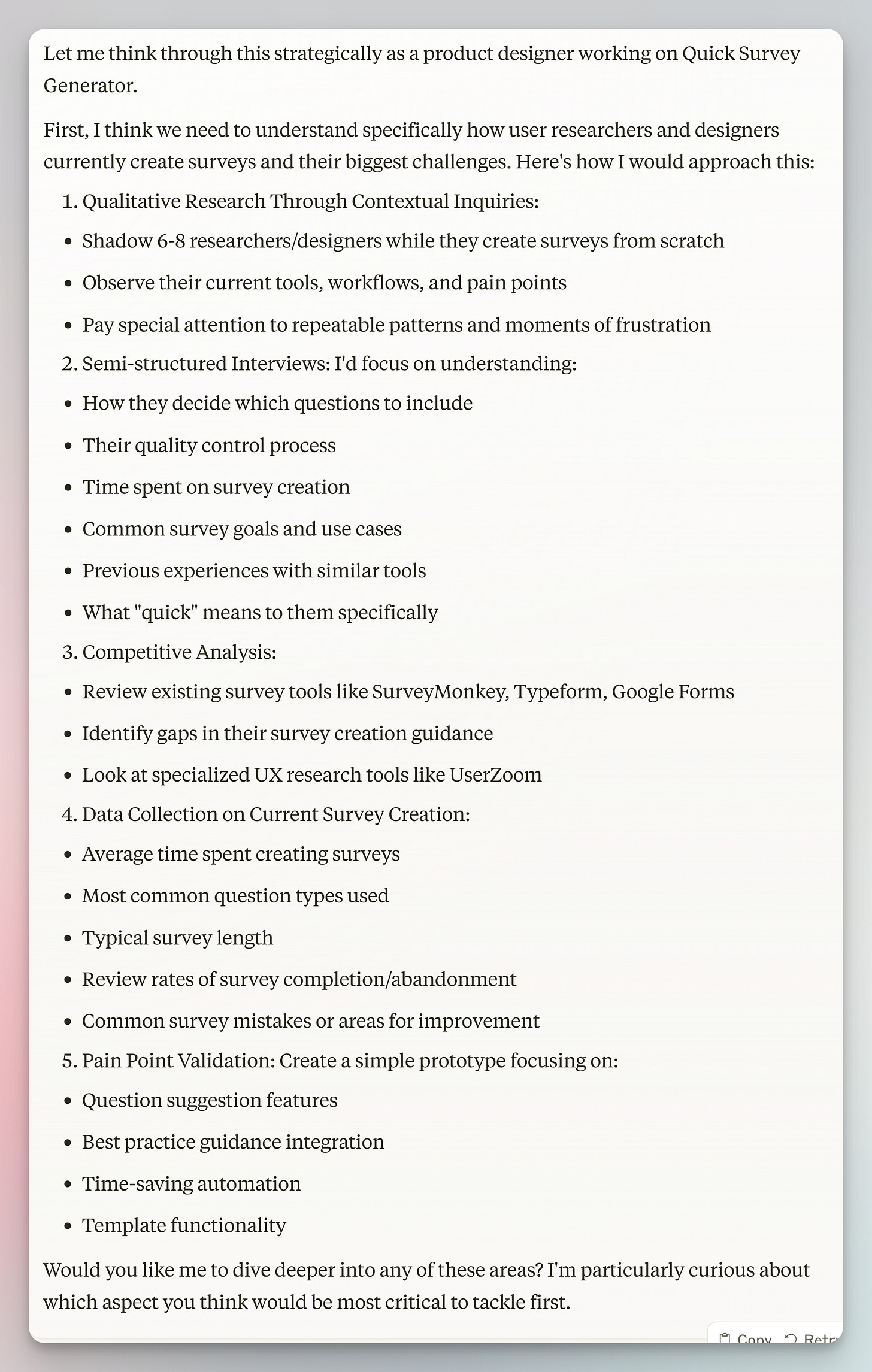

You are a seasoned product designer working on a tool called Quick Survey Generator, which helps user researchers and designers create surveys quickly with the best questions. What are the helpful next steps to understand user pain points and ensure the product meets their needs?

Claude’s Response

ChatGPT’s Response

Takeaway

Winner: Claude (using Claude 3.5 Sonnet)

Reason: Despite its concise format, Claude’s response was clearly more relevant and actionable than ChatGPT’s.

2️⃣ Competitive Analysis

My Prompt

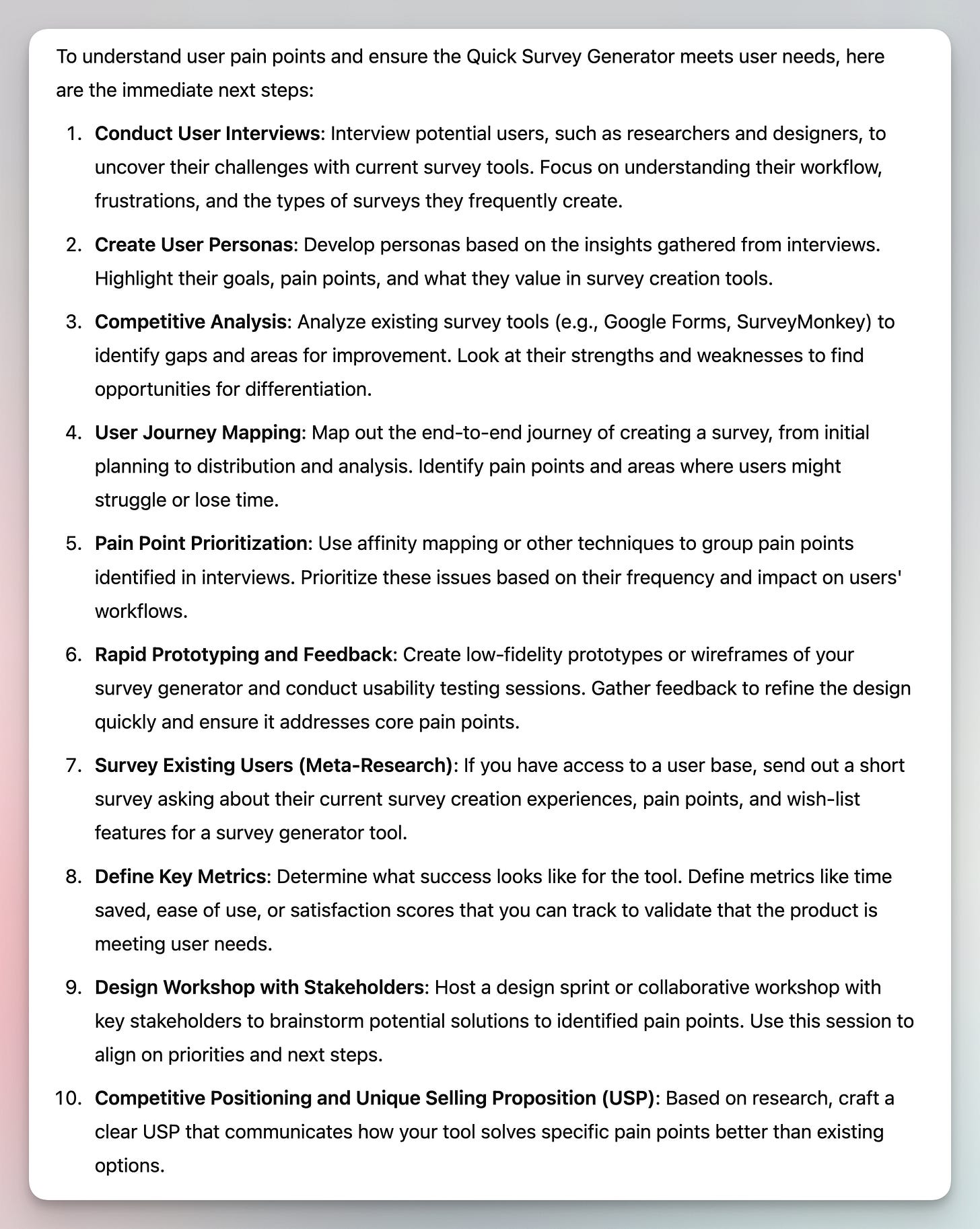

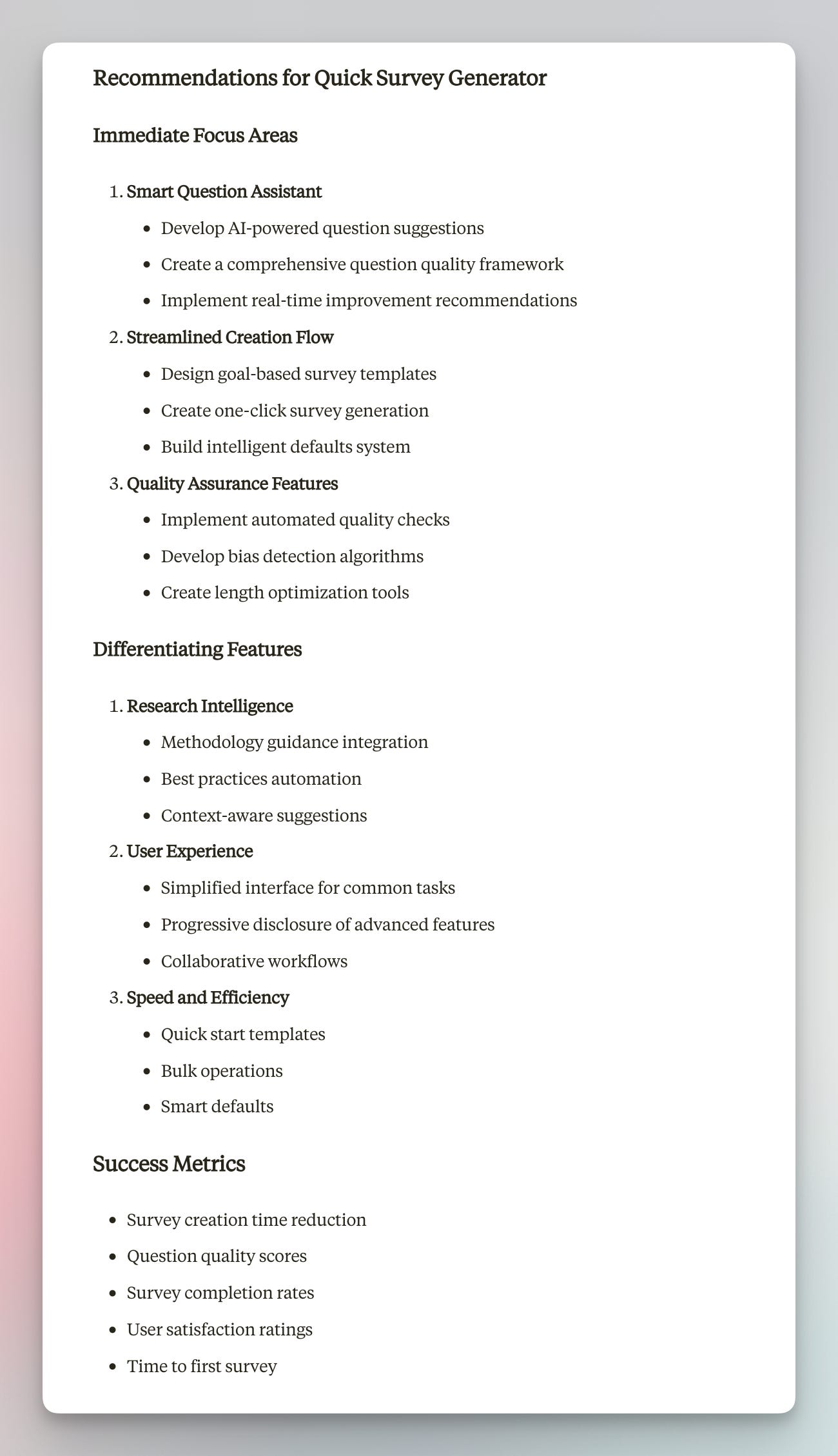

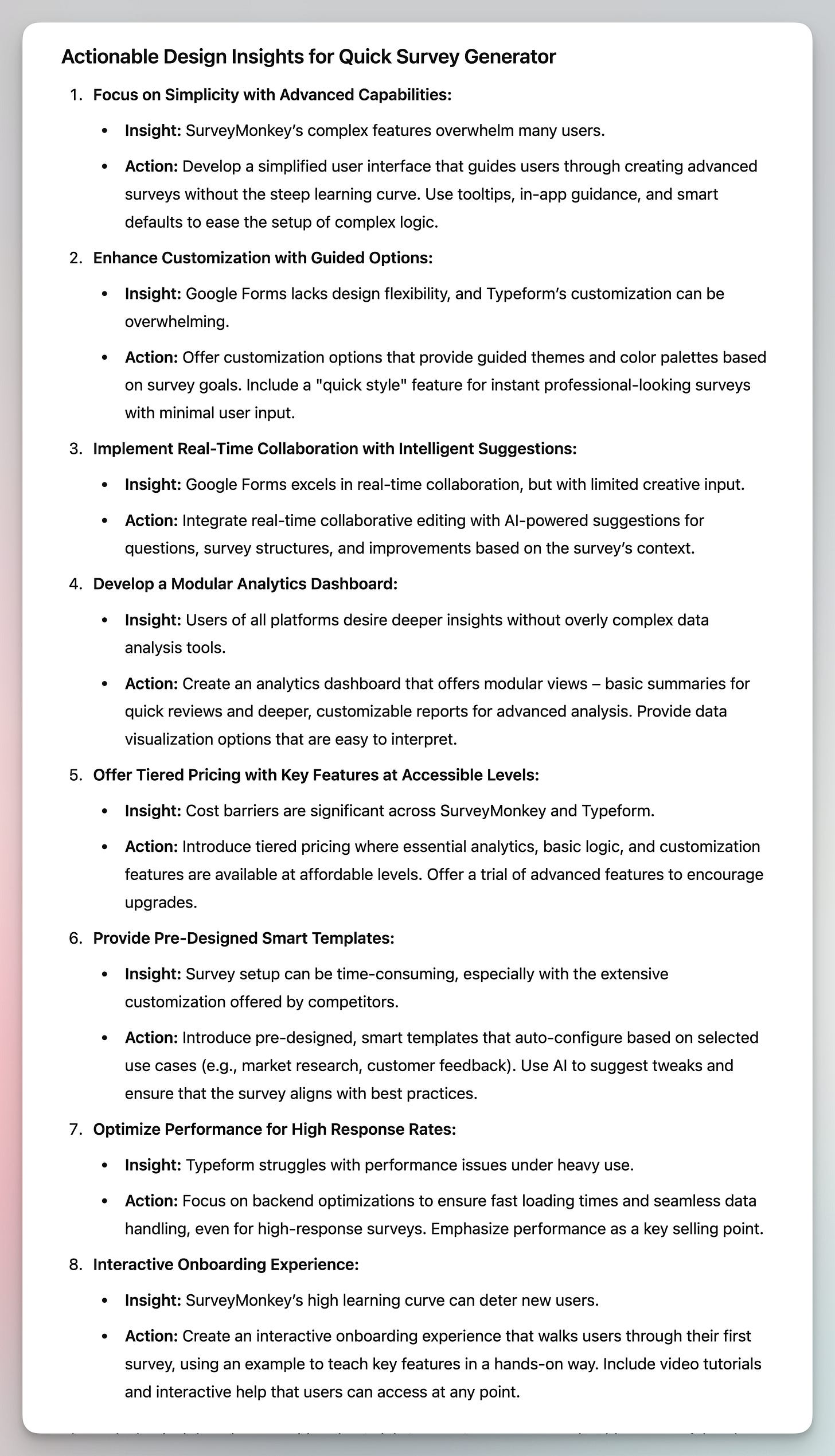

Conduct a competitive analysis of popular survey tools in the market. Identify key features, strengths, weaknesses, and user pain points. Provide actionable design insights to differentiate our Quick Survey Generator, focusing on areas where we can improve user experience, streamline the survey creation process, and address unmet needs.

Claude’s Response

(Only sharing partial response:)

ChatGPT’s Response

(Only sharing partial response:)

Takeaway

Winner: Tie

Reason: The competitive analysis that Claude and ChatGPT did was almost the same.

But their actionable insights covered different information.

Because of the article length limit, I couldn’t include the snapshot of the full response—Claude actually generated more than what I showed above, such as a helpful section named “Opportunity Areas”.

Claude’s response was concise yet to the point. It also covered more angles than ChatGPT, which I found helpful.

And when I asked the follow-up question “Can you elaborate on the section of ‘User Experience’?”, Claude generated a refreshing deeper dive for me that included:

Current Market Pain Points

Recommended UX Improvements

Key UX Principles

Priority Matrix (High, Medium, Low)

Success Metrics for UX Improvements

On the other hand, ChatGPT’s response was more descriptive, as if someone was explaining to me.

3️⃣ Interview Questions

My Prompt

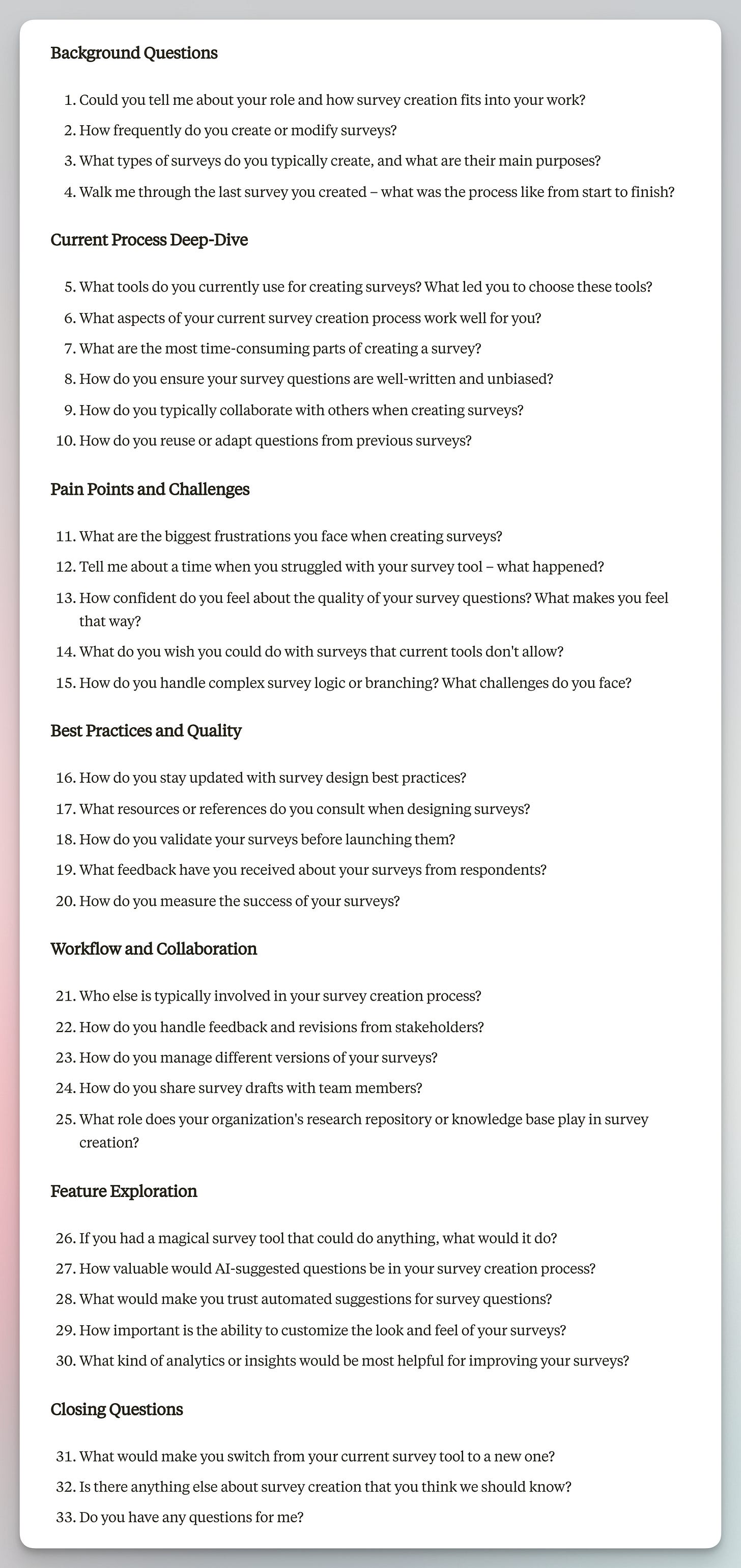

Generate a set of interview questions to ask potential users of our Quick Survey Generator tool, which is designed to help researchers and designers create surveys quickly with the best questions. The questions should aim to uncover user pain points, needs, and expectations regarding survey creation tools.

Claude’s Response

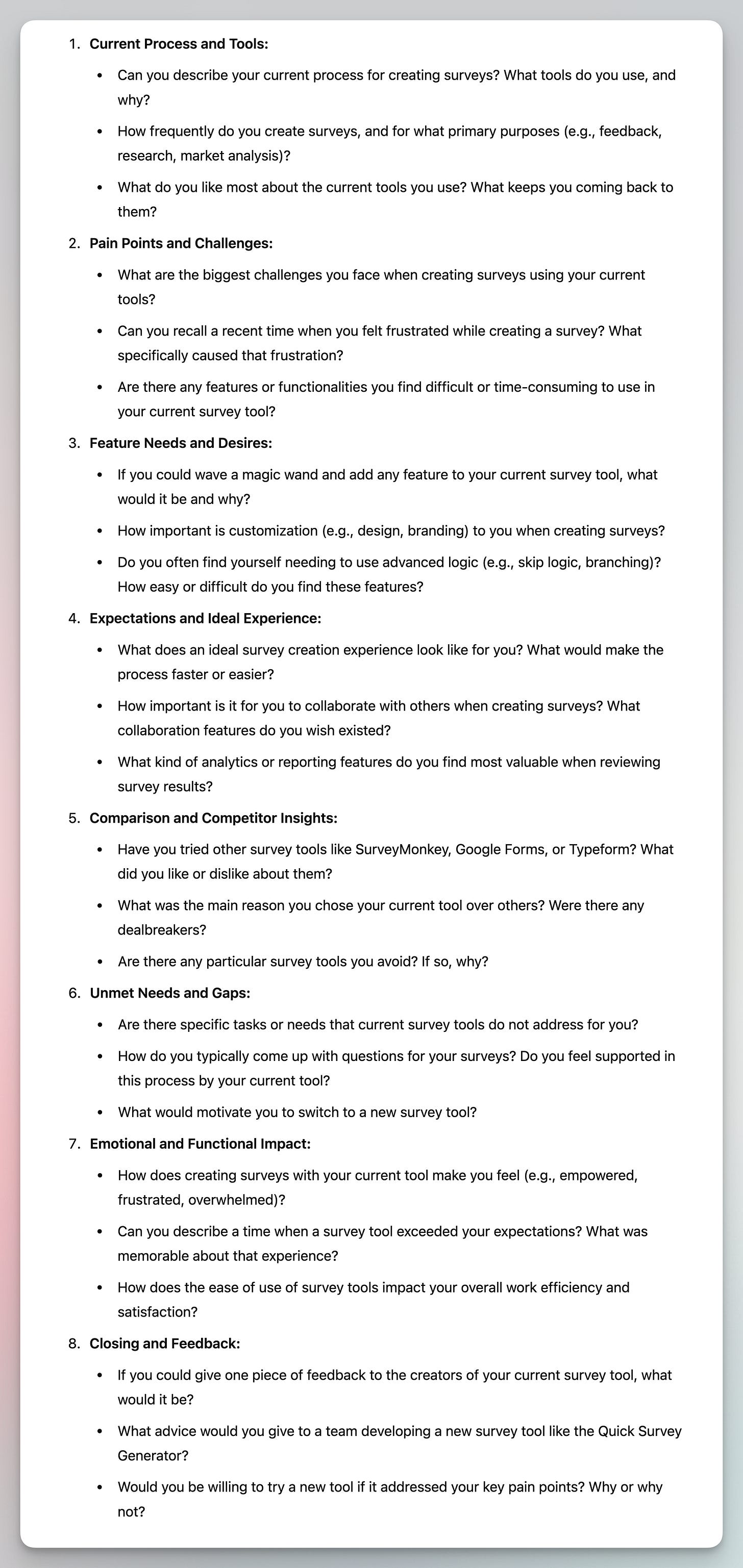

ChatGPT’s Response

Takeaway

Winner: Claude (using Claude 3.5 Sonnet)

Reason: Again, I thought Claude’s response was slightly better, while several questions from ChatGPT just didn’t seem reasonable to me, such as:

How does creating surveys with your current tool make you feel?

How does the ease of use of survey tools impact your overall work efficiency and satisfaction?

4️⃣ Design Feedback

My Prompt

Act as a seasoned product designer and provide detailed feedback on the user interface design provided.

(As in my newsletter on Perplexity vs. ChatGPT, I attached a screenshot of Typeform’s Pricing Page.)

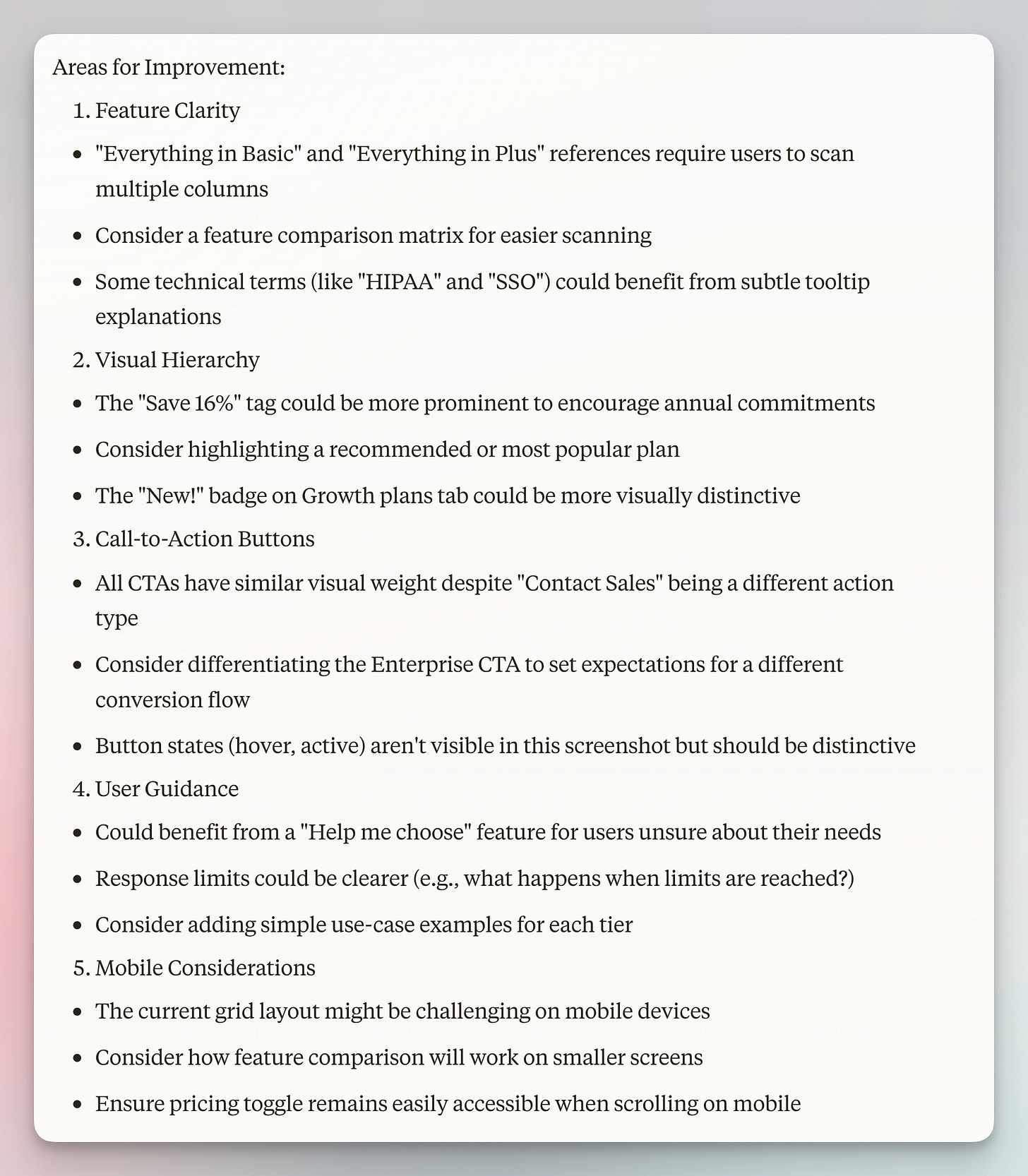

Claude’s Response

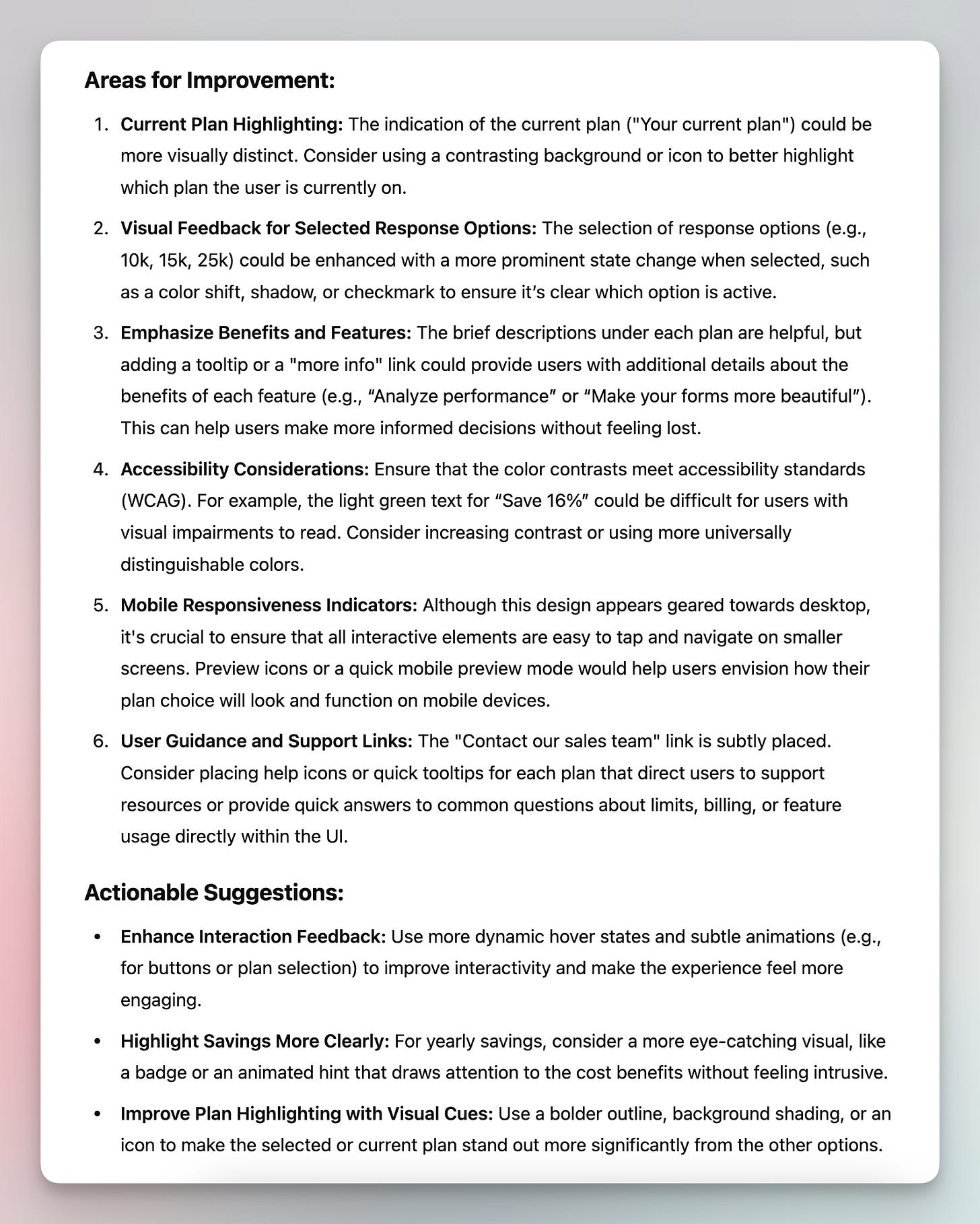

ChatGPT’s Response

Takeaway

Winner: Tie

Reason: Claude brought up some specific good points, such as mobile responsiveness challenges and jargon like HIPAA, while ChatGPT was more descriptive in providing detailed suggestions, such as considering a more prominent state change like a color shift, shadow, or checkmark.

5️⃣ Image Analysis

My Prompt

Analyze the information shown in the image provided and describe it in detail.

(I used the same screenshot from Prompt #4, and due to the article length limitation, I’ll skip the responses here and jump directly to my takeaway.)

Takeaway

Winner: Tie

Reason: Claude and ChatGPT's responses were quite similar, both providing a structured and accurate description of the details shown in the user interface snapshot I provided.

6️⃣ Wireframe Generation

My Prompt

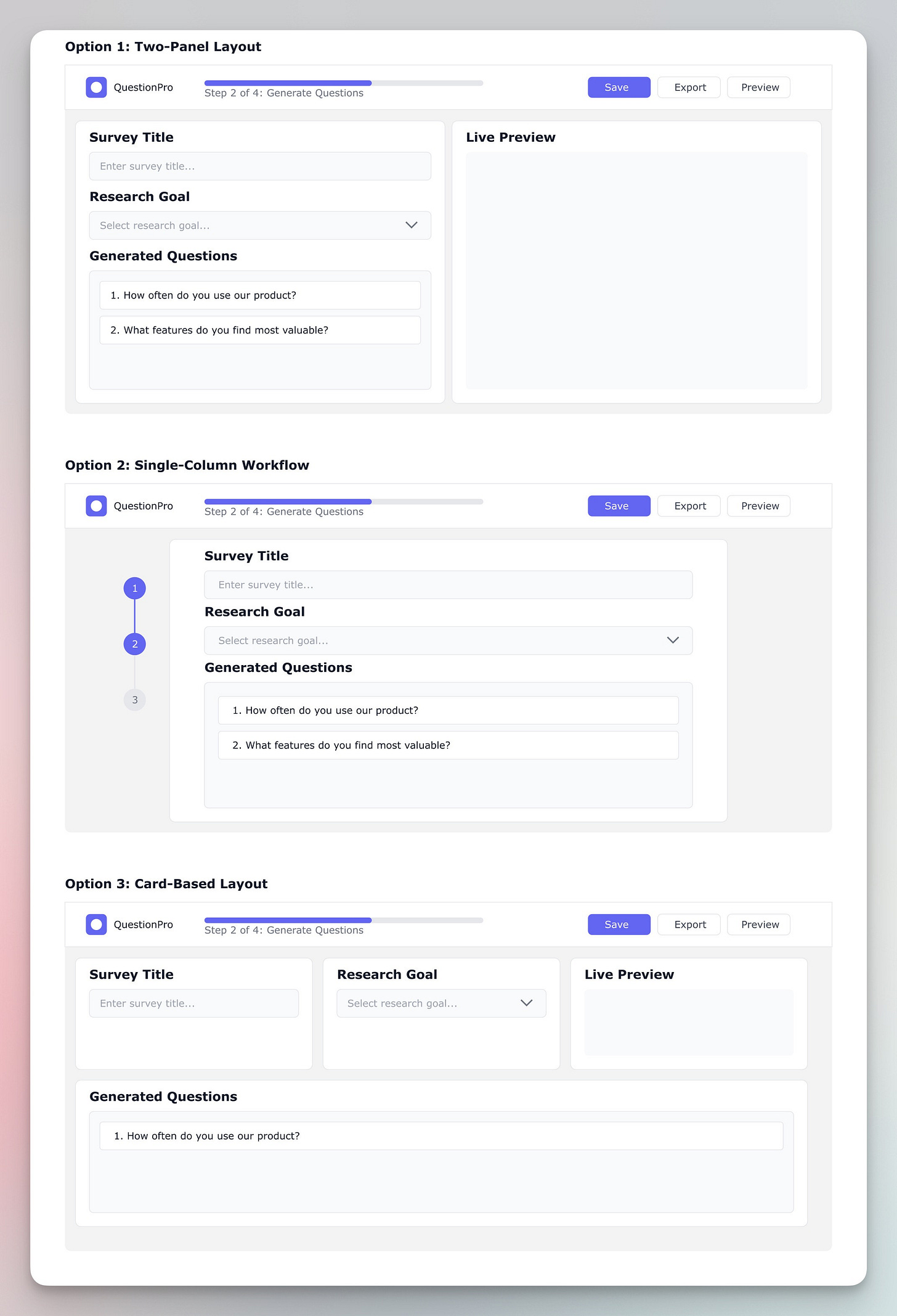

Design three high-fidelity wireframe options for a question generator tool tailored for desktop view.

- Option 1: Two-panel layout (editing + preview)

- Option 2: Single-column workflow

- Option 3: Card-based modular layout

Layout:

- Navigation: Progress, menu, logo

- Input: Survey title + research goal selector

- Display: Generated questions (editable)

- Actions: Save, preview, export

- Preview: Live survey preview

Claude’s Response

ChatGPT’s Response

Takeaway

Winner: Claude (using Claude 3.5 Sonnet)

Reason: This was the easiest win of all. Claude generated a code-backed design and leveraged its Artifact feature to display it. The quality was impressive.

In contrast, ChatGPT’s response was simply an expanded version of my text prompt.

When I “forced” ChatGPT to generate an image, the result came from DALL·E, and it was not useful at all.

💡 Summary

Based on the tasks I listed above, I gave Claude the edge over ChatGPT as I found its answers more helpful in general.
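To make that verdict concrete, here is a minimal sketch that tallies the winners I announced in the six takeaways above. The dictionary simply restates those outcomes; the variable names are my own and only for illustration.

```python
from collections import Counter

# Winners as announced in each Takeaway section of this article.
results = {
    "Product Discovery": "Claude",
    "Competitive Analysis": "Tie",
    "Interview Questions": "Claude",
    "Design Feedback": "Tie",
    "Image Analysis": "Tie",
    "Wireframe Generation": "Claude",
}

# Count how many tasks ended with each outcome.
tally = Counter(results.values())

# Counter returns 0 for outcomes that never occurred (ChatGPT here).
for outcome in ("Claude", "ChatGPT", "Tie"):
    print(f"{outcome}: {tally[outcome]}")
```

Three wins and three ties across six tasks is a small sample, so treat the tally as a summary of this one experiment and not a general ranking.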

The two tools have different styles in their responses:

Claude has a concise, bullet-pointed, and well-structured style while covering a good variety of angles.

ChatGPT has a more descriptive vibe, explaining things in a conversational, human-like tone.

I can see scenarios where ChatGPT would be a better fit, such as creative writing, ideating, and particularly when a narrative explanation is needed. It often surprises me with its ability to explain things in an engaging way.

I still like to use ChatGPT as a general-purpose assistant, but Claude’s responses in this experiment definitely came as a pleasant surprise, and I’ve been using Claude more and more.

That’s it for this week. Thanks for reading.

Let me know if you have any questions.

—Xinran

P.S. I was shocked to see that around 1k people subscribed to Design with AI in the past 30 days. Welcome and thank you!

Like this detailed comparison, but love your analysis! How would Claude and ChatGPT rate each other's responses?

I wonder if you'd get better-quality wireframes in Claude if you first ask it to generate a design spec and then feed that back in to create the wireframes.

This approach works great with bolt to create simple prototypes. But I've never tried it with Claude for wireframes. I'll give it a try this weekend.